
Facebook announced Wednesday that it had removed three networks from its platforms that promoted content targeting users in eight African countries. The company says the networks have links to Yevgeniy Prigozhin, the Russian oligarch indicted by U.S. prosecutors for interfering in the 2016 presidential election. The operation illustrates how the Kremlin's playbook on election interference and political meddling continues to evolve and adapt.

The Stanford Internet Observatory at the university’s Cyber Policy Center also released a detailed analysis of the networks, noting that while Russia’s efforts to assert geopolitical strength by engaging in Africa militarily and economically are well known, “there is emerging evidence that Russian-linked companies are now active in the information space as well.”

The Stanford researchers studied seven Instagram accounts and 73 Facebook pages, concluding that the pages had made over 48,800 posts, generating 9.7 million interactions, including 1.72 million likes.

In total, the company removed more than 100 Facebook and Instagram accounts targeting audiences in Madagascar, the Central African Republic, Mozambique, the Democratic Republic of the Congo, Côte d'Ivoire, Cameroon, Sudan, and Libya.

"We're taking down these Pages, Groups and accounts based on their behavior, not the content they posted," Nathaniel Gleicher, Facebook's head of cybersecurity policy, wrote in the company's announcement. "In each of these cases, the people behind this activity coordinated with one another and used fake accounts to misrepresent themselves, and that was the basis for our action."

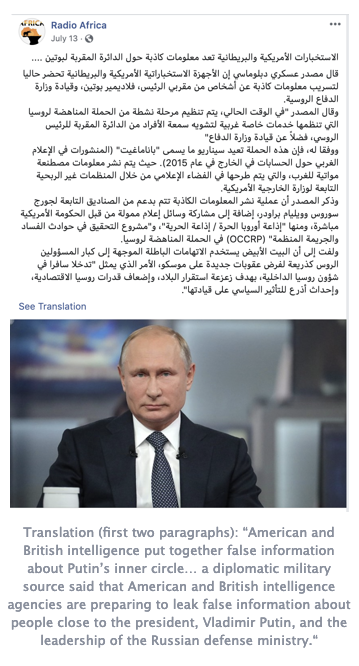

Gleicher wrote that "the people behind these networks attempted to conceal their identities and coordination," but that the company's investigation linked them to Prigozhin, a close Putin ally. Prigozhin is behind Russia's Internet Research Agency (IRA), which the U.S. government accused of creating and operating a social media influence campaign as part of broader efforts to undermine the 2016 U.S. presidential election in support of Donald Trump.

The Stanford report notes that the operation made use of Twitter accounts, WhatsApp and Telegram groups, Facebook Live video streaming, and Google Forms, and relied on a mix of fake accounts and pages alongside accounts run by contractors living in Africa.

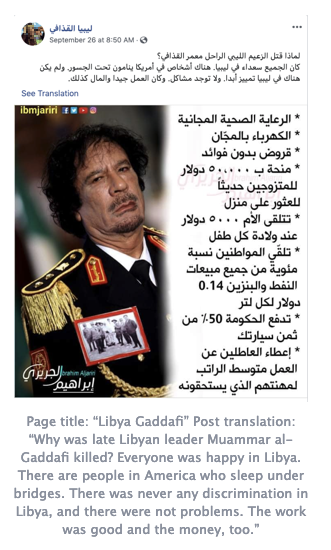

The operations in Libya, which relied on a combination of Egyptian nationals and fake accounts, focused on Libyan politics and policy matters and presented themselves as journalistic efforts, sometimes hosting multiple sides of a debate. But the pages and memes also generally supported the late dictator Moammar Qaddafi and his son, Saif al-Islam Qaddafi, along with a Russian-backed general, Khalifa Haftar.

In Sudan, the operation also mixed authentic local accounts with fake ones posing as news organizations to comment on, post to, and manage pages that generally offered a pro-Russian point of view.

"Considered as a whole, these clusters of Pages were intended to foster unity around Russia-aligned actors and politicians," the Stanford team wrote, noting that the tactic differs from the IRA's approach in the U.S., which sought to exploit existing fissures in domestic political and social debates. By including local content creators, the pages targeting Africa gained a credibility and authenticity that reinforced the perception that they were trustworthy sources.

“This operation was not only a glimpse into what Prigozhin and his companies are doing in Africa but a hint as to what shapes these operations might take in the future,” they warn.