Mother Jones; Getty; photo courtesy of Megan Squire

Open one social media platform and you’re hit with a fake video; open another and you’re hit with bigotry. Open a news article, and you’ll find some victims “killed” but others “dying.” Each account of events in Israel and Palestine seems to rely on different facts. What’s clear is that misinformation, hate speech, and factual distortions are running rampant.

How do we vet what we see in such a landscape? I spoke to experts across the fields of media, politics, tech, and communications about information networks around Israel’s war in Gaza. This interview, with computer scientist Megan Squire, is the first in a five-part series that also includes journalist and news analyst Dina Ibrahim, media researcher Tamara Kharroub, communications and policy scholar Ayse Lokmanoglu, and open-source intelligence pioneer Eliot Higgins.

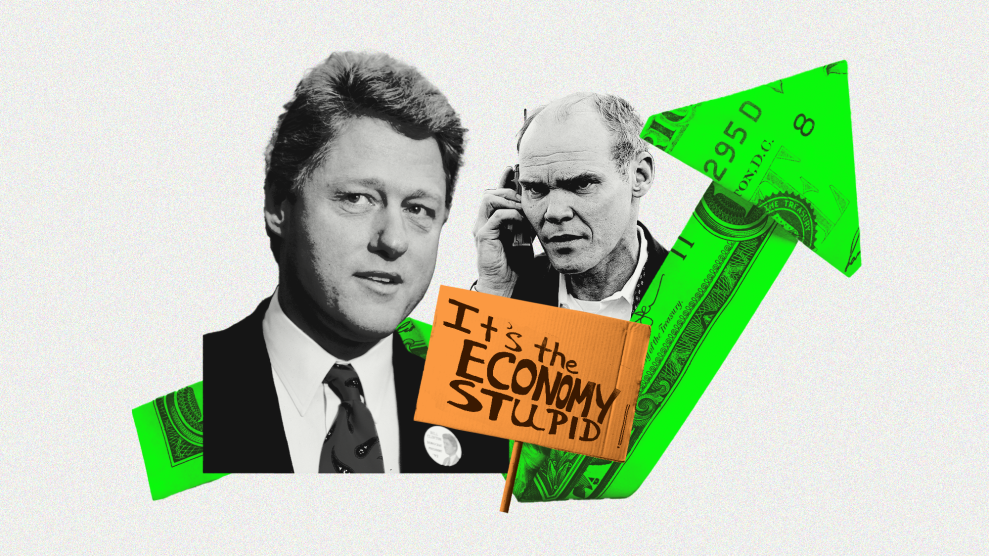

Megan Squire is the Southern Poverty Law Center’s deputy director for data analytics and open-source intelligence (OSINT). A former professor of computer science at Elon University in North Carolina, Squire now uses data science tools to track hate and extremism online. Her previous research has explored the monetization of propaganda, the rise of anti-Muslim hate on Facebook, and the role of the encrypted messaging platform Telegram in social movements and political extremism. As a Belfer Fellow at the Anti-Defamation League’s Center for Technology and Society, Squire is currently researching the effects of deplatforming extremist actors in online spaces.

The problems we’re seeing, Squire says, are not new. “In some ways, it’s gotten worse. We’re not handling this as well as we should be.”

I spoke with Squire about how social media platforms have been spreading news about Israel and Palestine: accurately, inaccurately, and sometimes dangerously.

What’s the importance of your field with respect to (mis)information, especially as it pertains to Israel and Palestine?

I’m currently specializing in open-source intelligence, which means going out and using the internet, usually publicly available sources, as intelligence to research some kind of question. At the SPLC, the question is what’s going on with extremism in the US. We use intel from various places [to] help answer that question. In the context of this conflict, those same tools we use to surface information about hate and extremism are being exploited to produce propaganda and disinformation.

Sometimes we notice that disinformation about the Israel-Hamas conflict is also intersecting with the communities that we study, like antisemitic content or conspiracy theories.

Could you speak more about that rise in disinformation?

Open-source intelligence surfaces information that’s generally available publicly. We perform data analytics to try to get a sense of patterns that are emerging. With the conflict right now, there are two or three buckets of materials that we’re seeing—a lot of the same types we’d see doing [our usual] work at the SPLC to look at hate and extremism.

One is conspiracy theories. These range from materials, videos, postings, and propaganda memes that say things like, “The attack was an inside job by Israel,” or “Joe Biden greenlit the attack.” They take a lot of different formats, but they’re all spreading on social media. The importance of open-source intelligence is that we can find that information on social media and determine things like who started it, where it is, and how it’s spreading.

The second big bucket spreading on social media is video footage, particularly old or unrelated footage. The misapplication of footage from other events is a very specific type of disinformation. We have tools for video analytics that we use to track how this content spreads. Related to that is the emergence of deep-fake videos. A lot of study in open-source intelligence is trying to figure out ways we can detect whether videos are fake, and head that off at the pass.

What kinds of platforms are involved?

The places where we’re seeing this whole mess emerge—there are the usual suspects, some of the common platforms, like Meta/Facebook, X/Twitter, YouTube, Reddit.

The thing with those mainstream platforms is that most at least give a nod to some kind of content moderation. You see a video, you report it as fake, they take it down or they mark it. Some are more successful than others. X has not been as successful in tamping down misinformation because of its changes to content moderation and the blue check program.

The real danger, though—and this isn’t the first time we’ve seen this in big world events—is Telegram, a platform that’s enormously popular outside the US, and enormously popular inside the US with extremist groups. It is really ground zero right now for propaganda, fake videos, and all that type of stuff. The conspiracy theories are all emerging out of Telegram and spreading from there to the other platforms.

The reason is that the platform specifically is designed for this. There isn’t effective content moderation on Telegram. They don’t aspire to do it. And even when they’re forced to do it, they don’t do it well.

The technical features of Telegram also make [it] a haven for folks who want to spread bad stuff. You can share videos or files of any kind up to two gigabytes. That makes it really appealing as a place to distribute content. If you think about X, you can share little videos on there, but you can’t build a propaganda library outright.

They also have voice chat and video streaming capabilities. It has different levels of encryption, so you can have private encrypted chats. It allows for both propaganda-spreading and encrypted chats on the same platform. They have bots that will allow you to give and receive cryptocurrency for payments. You can imagine some of these groups that don’t have access to traditional finance would love that—and it’s already there, built right in.

You can do it all on one platform. It’s the perfect tool.

To clarify, information from Telegram is disseminated on other social platforms?

This is not the first time it’s happened. The first time I drew a picture of that happening using network analysis software was in 2019, regarding the shooter in Halle, Germany.

The video propaganda that he made of his attack started on Telegram, and I mapped how it moved to other Telegram channels, then eventually out onto the mainstream internet, where it was removed. Then it came back to Telegram and spread again. This is really common. It’s almost a recipe at this point.

We are seeing increased Islamophobia and antisemitism offline. How does web-based disinformation play into that?

This is not the ’90s anymore. We don’t go onto the web, we don’t surf the web as a separate activity. We work online. We do our activities online. There’s not really that separation anymore. Online rhetoric can cause real-world harm.

That’s something that people believe, but we’re constantly looking for the proof in the pudding. I’m always trying to look for the numbers.

How can we establish that connection, and make sure there’s causality there?

It’s something that groups like mine are very interested in tracking: We track the online harms and the offline harms, and look to see if they’re related. Does a rise in antisemitic postings correlate to a rise in antisemitic hate crimes or other types of real-world activity that may not be so flagrantly violent, such as flyering incidents or vandalism? Do we see connections between online memes directing folks to do [hate crimes], and then in the real world, actually carrying out those things?

We’re constantly tracking that, and we’re not the only groups. We’re all trying to collect enough data to make sense of it and prove that causality.

Do you have any advice for readers navigating the media landscape?

One that I give pretty consistently: If you find yourself on a platform where things feel really spicy, with a lot of edgy stuff going on, that’s where a lot of content is going to be disinformative. It’s going to be potentially harmful. The more freewheeling it feels, the higher your risk of being exposed to exactly the false information people want to avoid.

The platforms that used to seem relatively benign or safe, or where you could at least carve out a space for yourself—I’m looking at Twitter, now X—right now, I wouldn’t recommend folks get their information necessarily from there. It’s just not reliable. Really think long and hard about where you’re getting your information.

This whole episode is ongoing; it is so fraught and so sad and awful. The sad part of how it’s unfolding online follows the patterns that we’ve seen before. But it’s amplified because the violence is so intense and so great.

This interview has been lightly edited and condensed for clarity.