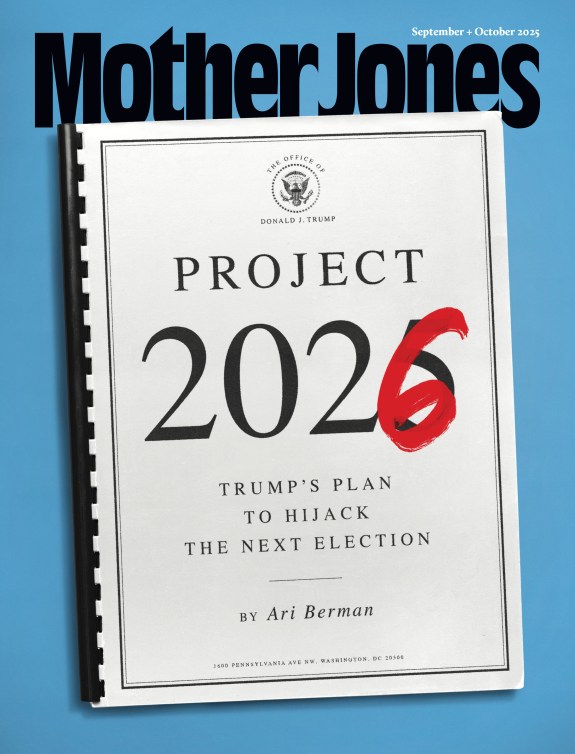

Mother Jones illustration

Russian bots and trolls on Twitter have stayed plenty busy lately. In the days before charges against three former Trump campaign officials were unsealed on Monday, pro-Russia influencers tracked by the Hamilton 68 dashboard were pushing stories on Twitter about “collusion” between Russia and Hillary Clinton, a narrative regarding a 2010 sale of uranium rights that has long since been debunked. [Editor’s note: Hamilton 68 was discontinued by its creator, the Alliance to Secure Democracy, in 2018. The group has since been criticized for refusing to disclose specifics, including which accounts it was tracking and which if any were directly Kremlin-linked. This article has been updated.] According to the nonpartisan security research project, a week’s worth of tweets from late October turned up a wave of content with “some variation on a theme of corruption, collusion, cover-up by the Clinton-led State Department and/or the Mueller-led FBI,” as well as content attacking special counsel Robert Mueller and former FBI Director James Comey. And since Friday, when news reports made clear that the special counsel’s team was moving ahead with indictments, the dashboard has registered a sharp increase in attacks specifically against Mueller.

As such propaganda campaigns continue to infect social media, researchers face a daunting challenge in diagnosing their impact. Thanks to how Facebook, Twitter, Google, and other tech giants currently operate, the underlying data from social-media platforms can easily become inaccessible. And the frustration extends to increasingly agitated congressional investigators, who will hear testimony from tech leaders on Tuesday and Wednesday concerning the Kremlin’s propaganda war on the 2016 election.

“You can launch a disinformation campaign in the digital age and then all evidence of that campaign can just disappear,” says Samantha Bradshaw, a lead researcher in Oxford University’s Computational Propaganda Project, which found that battleground states were inundated with fake news and propaganda from Russian sources in the days before Trump was elected.

Facebook recently removed access to thousands of posts by Russian-controlled accounts analyzed independently by Columbia University researcher Jonathan Albright. And while Albright told the Washington Post recently that those posts likely reached millions more than the 10 million people Facebook had originally estimated, Facebook is now set to tell Congress that Russian-linked content may have reached as many as 126 million users. For its part, Google will testify that Russian agents uploaded more than 1,000 videos to YouTube.

Researchers say that links to fake news stories or YouTube videos may work one day and return an error message the next. Propaganda-spewing Twitter accounts may suddenly go dark—perhaps because the owners deactivated them, or perhaps because the accounts were flagged and the social network suspended them, as Twitter did this fall with 201 accounts discovered to be part of a Russian influence operation. Recode reported Monday that Twitter will tell Congress it has since found more than 2,700 accounts tied to the Kremlin’s Internet Research Agency.

Three senators recently introduced the bipartisan Honest Ads Act, which would require social-media companies to disclose the sources of political ads; a companion bipartisan bill also was introduced in the House. “If Boris Badenov is sitting in some troll farm in St. Petersburg pretending to be the Tennessee GOP, I think Americans have a right to know,” said Sen. Mark Warner (D-Va.), one of the bill’s sponsors.

Last week Twitter made a preemptive move to open up more data about advertising, announcing a new Advertising Transparency Center that would show the sources for all ads posted on Twitter and reveal audience-targeting information. Twitter also said it will no longer accept advertising from RT and Sputnik, two Russian-media organizations that have spent $1.9 million on Twitter ads since 2011 and were identified by the US intelligence community as key players in Russia’s 2016 election interference. On Friday, Facebook also announced plans to improve transparency for its ads ahead of the US midterm elections in 2018.

But researchers say these efforts don’t address the fact that propaganda is often spread through sock-puppet accounts or groups that produce authored posts rather than paid ads. For example, as BuzzFeed reported, the “TEN_GOP” Twitter account, which posed as the “Unofficial Twitter of Tennessee Republicans” but was revealed by Russian journalists to be operated by a Kremlin troll factory, had 136,000 followers before it was shut down. And the account was retweeted before the election by multiple Trump aides, including senior adviser Kellyanne Conway, according to the Daily Beast. Many TEN_GOP posts appeared to be authored posts rather than ads, says Ben Nimmo, a fellow at the Atlantic Council’s Digital Forensic Research Lab who recently reconstructed part of the account’s tweeting history. Twitter has said that none of the 201 Russian-linked accounts it closed were registered as advertisers on the platform.

A key challenge here is that user privacy has been baked into Twitter and Facebook’s platforms since their inception. “Their first instinct was to protect people’s privacy,” Nimmo says. “And then when a malicious Russian account is found, their instinct is to get rid of it. And then you don’t have it at all. There goes a lot of evidence.”

He adds: “The platforms are between a rock and a hard place. They have to balance protecting people from the minority of anonymous malicious users with protecting the privacy of the majority of users.”

Some researchers’ attitudes toward data and privacy have begun to shift as disinformation campaigns have hit social networks. “Two years ago, I would have been arguing that data retention is bad because of privacy reasons,” Bradshaw says. “Now, I would say that some data retention is good.”

But it’s not an easy equation to solve. Nimmo recalls how the social-media networks’ anonymous accounts were celebrated as an asset to democratic movements during the Arab Spring protests in 2010. “Pro-democracy demonstrators were using anonymous accounts to organize protests because then they were safe,” he says. “Now, malicious users have figured out they can hide behind anonymous accounts as well.”

Emilio Ferrara, a University of Southern California research professor whose work showed that 1 in 5 election-related tweets last fall were generated by bots, says Twitter’s instinct to remove propaganda may be understandable, but if it involves actually deleting data, that’s problematic. “There is no need to physically destroy such content in the company’s servers. It can be just hidden from the public interface, and records should be kept for auditing purposes,” he says. “Their implementation raises critical issues on accountability that social-media service providers should be asked to address moving forward.”

A Twitter spokesperson declined to comment to Mother Jones about what types of data might be preserved, but said that the company looks forward to discussing these issues on Capitol Hill.

Lisa-Maria Neudert, another lead researcher at Oxford, believes the social-media companies, lawmakers, and researchers should be working together as they investigate the Russian influence campaign. “It is a year after the US elections and slowly we are being spoon-fed with tiny chunks of data and reveals about potential Russian election hacking,” she says. “These analyses need to speed up and engage research and government. It seems to me that just because they are occurring in a new sphere, online, they go unaddressed. Imagine a state had hacked our national TV channels for propaganda—there would be an uproar.”

For now, in order to protect research even when data disappears, Bradshaw says Oxford researchers have started capturing more screenshots from suspicious accounts. In the meantime, researchers can still make some painstaking headway with data that’s publicly available, Neudert says.

Ultimately, the question of what responsibility social-media companies should bear for disinformation may become a First Amendment debate. Leah Lievrouw, a professor at the University of California, Los Angeles, who researches the intersection of media and information technologies, says the government could model regulation of the tech companies on rules that historically have governed the broadcast media or telecom industries. But she sees an even higher bar for social-media companies, pertaining to the First Amendment.

“They want to avoid taking the responsibility of the cultural institutions they are,” Lievrouw says, noting that publishing and advertising companies set their own rules to safeguard the integrity of their content. “Come on, Facebook. You’re one of the big media grown-ups now.”